UiPath Specialized AI Associate Exam (2023.10) 온라인 연습

최종 업데이트 시간: 2025년10월10일

당신은 온라인 연습 문제를 통해 UiPath UiPath-SAIAv1 시험지식에 대해 자신이 어떻게 알고 있는지 파악한 후 시험 참가 신청 여부를 결정할 수 있다.

시험을 100% 합격하고 시험 준비 시간을 35% 절약하기를 바라며 UiPath-SAIAv1 덤프 (최신 실제 시험 문제)를 사용 선택하여 현재 최신 248개의 시험 문제와 답을 포함하십시오.

정답:

Explanation:

A label taxonomy is a hierarchical structure of concepts that you want to capture from your communications data, such as emails, chats, or calls. Each label represents a specific concept that serves a business purpose and is aligned to your objectives. A label taxonomy can have multiple levels of hierarchy, where each child label is a subset of its parent label. For example, a parent label could be “Product Feedback” and a child label could be “Product Feature Request” or “Product Bug Report”. A label taxonomy is used to train a machine learning model that can automatically classify your communications data according to the labels you defined1.

One of the best practices for designing a label taxonomy is to ensure that each label is clearly identifiable from the text of the individual verbatim (not thread) to which it will be applied. A verbatim is a single unit of communication, such as an email message, a chat message, or a call transcript segment. A thread is a collection of related verbatims, such as an email conversation, a chat session, or a call recording. When you train your model, you will apply labels to verbatims, not threads, so it is important that each label can be recognized from the verbatim text alone, without relying on the context of the thread. This will help the model to learn the patterns and features of each label and to generalize to new data. It will also help you to maintain consistency and accuracy when labelling your data2.

Reference: 1: Communications Mining - Taxonomies 2: Communications Mining - Label hierarchy and best practice

정답:

Explanation:

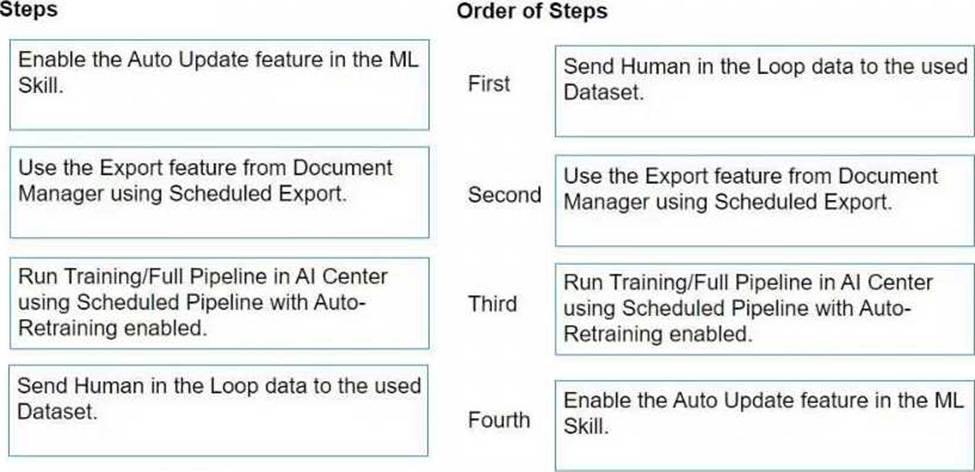

To automatically retrain and deploy a Document Understanding Machine Learning (ML) Model in AI Center with data from the Document Validation Action, the steps should be followed in this order: Send Human in the Loop data to the used Dataset.

This step involves sending the data that has been validated and corrected by human reviewers to the dataset. This data will be used for training the ML model.

Use the Export feature from Document Manager using Scheduled Export.

After the data is reviewed and validated, it needs to be exported from the Document Manager. Scheduled Export automates this process, ensuring the dataset in AI Center is regularly updated with new data.

Run Training/Full Pipeline in AI Center using Scheduled Pipeline with Auto-Retraining enabled. With the updated data in the dataset, the next step is to run the training or the full pipeline. The use of Scheduled Pipeline with Auto-Retraining ensures that the ML model is automatically retrained with the latest data.

Enable the Auto Update feature in the ML Skill.

Finally, enabling the Auto Update feature in the ML Skill ensures that the newly trained model is automatically deployed, making the improved model available for document understanding tasks. Following these steps in the specified order allows for a streamlined process of continuously improving the ML model based on human-validated data, ensuring better accuracy and efficiency in document understanding tasks over time.

정답:

Explanation:

According to the UiPath documentation, the recommended number of documents per vendor to train the initial dataset is 10. This means that for each vendor that provides a specific type of document, such as invoices or receipts, you should have at least 10 samples of their documents in your training dataset. This helps to ensure that the dataset is balanced and representative of the real-world data, and that the machine learning model can learn from the variations and features of each vendor’s documents. Having too few documents per vendor can lead to poor model performance and accuracy, while having too many documents from a single vendor can cause overfitting and bias1.

Reference: 1: Document Understanding - Training High Performing Models

정답:

Explanation:

The Reports section of the dataset navigation bar in UiPath Communication Mining allows users to access detailed, quervable charts, statistics, and customizable dashboards that provide valuable insights and analysis on their communications data1. The Reports section has up to six tabs, depending on the data type, each designed to address different reporting needs2:

Dashboard: Users can create custom dashboard views using data from other tabs, such as label summary, trends, segments, threads, and comparison. Dashboards are specific to the dataset and can be edited, deleted, or renamed by users with the ‘Modify datasets’ permission3.

Label Summary: Users can view high-level summary statistics for labels, such as volume, precision, recall, and sentiment. Users can also filter by data type, source, date range, and label category. Trends: Users can view charts for verbatim volume, label volume, and sentiment over a selected time period. Users can also filter by data type, source, date range, and label category.

Segments: Users can view charts comparing label volumes to verbatim metadata fields, such as sender domain, channel, or language. Users can also filter by data type, source, date range, and label category.

Threads: Users can view charts of thread volumes and label volumes within a thread, if the data is in thread form, such as call transcripts or email chains. Users can also filter by data type, source, date range, and label category.

Comparison: Users can compare different cohorts of data against each other, such as different sources, time periods, or label categories. Users can also filter by data type, source, date range, and label category.

Reference: 1: Communications Mining - Using Reports 2: Communications Mining - Reports 3: Communications Mining - Using Dashboards: [Communications Mining - Using Label Summary]: [Communications Mining - Using Trends]: [Communications Mining - Using Segments]: [Communications Mining - Using Threads]: [Communications Mining - Using Comparison]

정답:

Explanation:

According to the UiPath documentation and web search results, OCR (Optical Character Recognition) is a method that reads text from images, recognizing each character and its position. OCR is used to digitize documents and make them searchable and editable. OCR can be performed by different engines, such as Tesseract, Microsoft OCR, Microsoft Azure OCR, OmniPaqe, and Abbyy. OCR is a basic step in the Document Understanding Framework, which is a set of activities and services that enable the automation of document processing workflows.

IntelligentOCR is a UiPath Studio activity package that contains all the activities needed to enable information extraction from documents. Information extraction is the process of identifying and extracting relevant data from documents, such as fields, tables, entities, and labels. IntelligentOCR uses different components, such as classifiers, extractors, validators, and trainers, to perform information extraction. IntelligentOCR also supports different formats, such as PDF, PNG, JPG, TIFF, and BMP. IntelligentOCR is an advanced step in the Document Understanding Framework, which builds on the OCR output and provides more functionality and flexibility.

Reference: About the IntelligentOCR Activities Package

OCR Activities

OCR Feature Comparison: Uipath Community vs Uipath Licensed OCR Document Understanding - Introduction

정답:

Explanation:

According to the UiPath Communications Mining documentation, coverage is one of the four main factors that contribute to the model rating, which is a holistic measure of the model’s performance and health. Coverage assesses the proportion of the entire dataset that has informative label predictions, meaning that the predicted labels are not generic or irrelevant. Coverage is calculated as the percentage of verbatims (communication units) that have at least one informative label out of the total number of verbatims in the dataset. A high coverage indicates that the model is able to capture the main topics and intents of the communications, while a low coverage suggests that the model is missing important information or producing noisy predictions.

Reference: Communications Mining - Understanding and improving model performance Communications Mining - Model Rating

Communications Mining - It’s All in the Numbers - Assessing Model Performance with Metrics

정답:

Explanation:

The Configure Extractors Wizard is a tool that enables you to select and customize the extractors that are used for data extraction from documents. It is accessed via the Data Extraction Scope activity, which is a container for extractor activities. The wizard allows you to map the fields defined in your taxonomy with the fields supported by each extractor, and to set the minimum confidence level and the framework alias for each extractor. The wizard is mandatory for the extractor configuration, as it ensures that the extractors are applied correctly to each document type and field1.

Reference: Configure Extractors Wizard of Data Extraction Scope

정답:

Explanation:

According to the UiPath documentation, a data source is a raw collection of verbatims, which are text-based communications such as survey responses, emails, transcripts, or calls1. A data source can be of a similar type and share a similar intended purpose, such as capturing customer feedback or servicing requests2. A data source can be added to up to 10 different datasets, which are collections of sources and labels that are used to train and evaluate ML models3. Therefore, the correct definition of a UiPath Communications Mining data source is A.

Reference: 1: Communications Mining - Sources 2: Communications Mining - Managing Sources and Datasets 3: Communications Mining - Understanding the data structure and permissions

정답:

Explanation:

Recall is a metric that measures the proportion of all possible true positives that the model was able to identify for a given concept1. A true positive is a case where the model correctly predicts the presence of a concept in the data. Recall is calculated as the ratio of true positives to the sum of true positives and false negatives, where a false negative is a case where the model fails to predict the presence of a concept in the data. Recall can be interpreted as the sensitivity or completeness of the model for a given concept2. For example, if there are 100 verbatims that should have been labelled as ‘Request for information’, and the model detects 80 of them, then the recall for this concept is 80% (80 / (80 + 20)). A high recall means that the model is good at finding all the relevant cases for a concept, while a low recall means that the model misses many of them.

Reference: 1: Recall 2: Precision and Recall

정답:

Explanation:

According to the UiPath documentation portal1, the Document Understanding Process is a fully functional UiPath Studio project template based on a document processing flowchart. It provides logging, exception handling, retry mechanisms, and all the methods that should be used in a Document Understanding workflow, out of the box. The Document Understanding Process is preconfigured with a series of basic document types in a taxonomy, a classifier configured to distinguish between these classes, and extractors to showcase how to use the Data Extraction capabilities of the framework. It is meant to be used as a best practice example that can be adapted to your needs while displaying how to configure each of its components1. The Document Understanding Framework, on the other hand, is a set of activities that can be used to build custom document processing workflows. The framework facilitates the processing of incoming files, from file digitization to extracted data validation, all in an open, extensible, and versatile environment. The framework enables you to combine different approaches to extract information from multiple document types. The framework consists of several components, such as Taxonomy, Digitization, Classification, Data Extraction, Data Validation, and Data Consumption2. Therefore, option D is the correct answer, as it describes the difference between the Document Understanding Process and the Document Understanding Framework.

Reference: 1 Document Understanding Process: Studio Template 2 Document Understanding - Introduction

정답:

Explanation:

The OOB labeling template is a predefined template that you can use to label your text data for entity recognition models. The template comes with some preset labels and text components, but you can also add your own labels using the General UI or the Advanced Editor.

When you add an entity label, you need to fill in the following information:

Name: the name of the new label. This is how the label will appear in the labeling tool and in the exported data.

Input to be labeled: the text component that you want to label. You can choose from the existing text components in the template, such as Date, From, To, CC, and Text, or you can add your own text components using the Advanced Editor. The text component determines the scope of the text that can be labeled with the entity label.

Attribute name: the name of the attribute that you want to extract from the text. You can use this to create attributes such as customer name, city name, telephone number, and so on. You can add more than one attribute for the same label by clicking on + Add new.

Shortcut: the hotkey that you want to assign to the label. You can use this to label the text faster by using the keyboard. Only single letters or digits are supported.

Color: the color that you want to assign to the label. You can use this to distinguish the label from the others visually.

Reference: AI Center - Managing Data Labels, Data Labeling for Text - Public Preview

정답:

Explanation:

The Data Extraction Scope activity provides a scope for extractor activities, enabling you to configure them according to the document types defined in your taxonomy. The output of the activity is stored in an Extraction Result variable, containing all automatically extracted data, and can be used as input for the Export Extraction Results activity. This activity also features a Configure Extractors wizard, which lets you specify exactly what fields from the document types defined in the taxonomy you want to extract1.

The extractors that can be used for Data Extraction Scope activity are:

Regex Based Extractor: This extractor enables you to use regular expressions to extract data from text documents. You can define your own expressions or use the predefined ones from the Regex Based Extractor Configuration wizard2.

Form Extractor: This extractor enables you to extract data from semi-structured documents, such as invoices, receipts, or purchase orders, based on the position and relative distance of the fields. You can define the templates for each document type using the Form Extractor Configuration wizard3.

Intelligent Form Extractor: This extractor enables you to extract data from semi-structured documents, such as invoices, receipts, or purchase orders, based on the labels and values of the fields. You can define the fields for each document type using the Intelligent Form Extractor Configuration wizard.

Machine Learning Extractor: This extractor enables you to extract data from any type of document, using a machine learning model that is trained on your data. You can use the predefined models from UiPath or your own custom models hosted on AI Center or other platforms. You can configure the fields and the model for each document type using the Machine Learning Extractor Configuration wizard.

Reference: 1: Data Extraction Scope 2: Regex Based Extractor 3: Form Extractor: Intelligent Form Extractor: Machine Learning Extractor

정답:

Explanation:

The ML Extractor is a data extraction tool that uses machine learning models provided by UiPath to identify and extract data from documents. The ML Extractor can work with predefined document types, such as invoices, receipts, purchase orders, and utility bills, or with custom document types that are trained using the Data Manager and the Machine Learning Classifier Trainer12.

According to the best practice, the ML Extractor should be chosen based on the document types, language, and data quality of the documents being processed. It is important to select an ML Extractor that is specifically trained or optimized for the document types that are relevant for the use case, as different document types may have different layouts, fields, and formats. It is also important to take into account the language of the documents, as some ML Extractors may support only certain languages or require specific language settings. Moreover, it is important to consider the quality and diversity of the training data used to train the ML Extractor, as this may affect the accuracy and reliability of the extraction results. The training data should be representative of the real-world data, and should cover various scenarios, variations, and exceptions3.

Reference: 1: Machine Learning Extractor - UiPath Activities 2: Machine Learning Classifier Trainer - UiPath Document Understanding 3: Data Extraction - UiPath Document Understanding

정답:

Explanation:

According to the UiPath documentation, the Classify stage of the Document Understanding Framework is used to automatically determine what document types are found within a digitized file. The document types are defined in the project taxonomy, which is a collection of all the labels and fields applied to the documents in a dataset. The Classify stage uses one or more classifiers, which are algorithms that assign document types to files based on their content and structure. The classifiers can be configured and executed using the Classify Document Scope activity, which also allows for document type filtering, taxonomy mapping, and minimum confidence threshold settings. The Classify stage outputs the classification information in a unified manner, irrespective of the source of classification. The documents that are classified are then sent to the next stage of the framework, which is Data Extraction. The documents that are not classified or skipped are either excluded from further processing or sent to Action Center for human validation and correction.

Reference: Document Understanding - Document Classification Overview Document Understanding - Introduction

Generative Extraction & Classification using Document Understanding in Cross-Platform Projects (Public Preview)

정답:

Explanation:

According to the UiPath Communications Mining documentation, reviewing and applying entities is a crucial step for improving the accuracy and performance of the entity extraction models. When reviewing entities, users should check all of the predicted entities within a paragraph, as well as any missing or incorrect ones. Users can accept, reject, edit, or create entities using the platform’s interface or keyboard shortcuts. Users can also change the entity type if the value is correct but the type is wrong. Reviewing and applying entities helps the platform learn from the user feedback and refine its predictions over time. It also helps users assess the automation potential and benefit of the communications data.

Reference: Communications Mining - Reviewing and applying entities Communications Mining - Improving entity performance